Predictable Verification: "Insights from Bugs"

- r1raider

- Mar 12, 2024

Updated: Apr 10, 2024

Introduction

Tracking and logging bugs is sometimes regarded as a chore and an overhead by enthusiastic hardware development teams who are eager to deliver working products to market as quickly as possible. This is not an unreasonable concern. Any process that slows down development without clear benefits will quickly fall by the wayside when the team is under pressure to meet delivery schedules. Not meeting those schedules can lead to missing the market and missing the ROI goals.

However, in this article we intend to convince you that logging and tracking bugs will eventually increase productivity and product quality, and reduce downstream costs from rework or consequential losses from reputational or opportunity impacts. You’ve heard us talking about data and analytics for hardware product development multiple times now, but here we are going to explain why bug data is one of the richest datasets.

Our key point is that bugs are a fantastic source of learning, and that high quality bug datasets yield important insights into your product and your development processes which, if acted upon, can lead to tangible improvements in ROI over the course of multiple projects. It’s often said that mistakes are the best form of learning and bugs are usually a manifestation of some sort of mistake. If you capture each of those learning points and implement them, no matter how minor some of them may be, each one is an opportunity for a marginal gain.

Marginal gains can win the race; ask any F1 team boss.

Our objective here is to review some of the challenges around bug tracking practices, which can be very varied from team to team, and set that against the benefits of having good bug data.

All data illustrated in this article has been synthetically generated by Silicon Insights Limited.

Bug Management Motivations

Bug logging is the practice of capturing a bug at the time of the bug event. A bug is entered into your bug tracking system along with key bits of useful information.

Bug tracking is the practice of tracking and updating the bug data through a bug workflow from initial discovery and triage, to debug and analysis, fixing the problem, fix verification, bug root cause analysis, and finally closure.
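
The workflow stages listed above can be thought of as a small state machine. The following is a minimal sketch in Python. The state names and the permitted transitions are assumptions made for illustration (the stages are named in the text, but no formal schema is given), and a real bug tracker would define its own.

```python
from enum import Enum


class BugState(Enum):
    # State names are illustrative assumptions, not a standard schema.
    NEW = "new"
    TRIAGED = "triaged"
    IN_DEBUG = "in_debug"
    FIXED = "fixed"
    FIX_VERIFIED = "fix_verified"
    ROOT_CAUSED = "root_caused"
    CLOSED = "closed"
    REJECTED = "rejected"


# Permitted transitions. A failed fix verification sends the bug back to debug,
# and a rejected bug can be re-opened if the problem is seen again.
ALLOWED = {
    BugState.NEW: {BugState.TRIAGED, BugState.REJECTED},
    BugState.TRIAGED: {BugState.IN_DEBUG, BugState.REJECTED},
    BugState.IN_DEBUG: {BugState.FIXED, BugState.REJECTED},
    BugState.FIXED: {BugState.FIX_VERIFIED, BugState.IN_DEBUG},
    BugState.FIX_VERIFIED: {BugState.ROOT_CAUSED},
    BugState.ROOT_CAUSED: {BugState.CLOSED},
    BugState.REJECTED: {BugState.NEW},
    BugState.CLOSED: set(),
}


def advance(current: BugState, target: BugState) -> BugState:
    """Return the new state, or raise if the workflow does not allow the move."""
    if target not in ALLOWED[current]:
        raise ValueError(f"cannot move bug from {current.value} to {target.value}")
    return target


state = advance(BugState.NEW, BugState.TRIAGED)
state = advance(state, BugState.IN_DEBUG)
print(state.value)
```

Encoding the transitions explicitly makes it hard for a bug to skip fix verification or root cause analysis on its way to closure, which is the point of having a workflow at all.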

Bug logging and bug tracking fulfill three main purposes which, as we see it, are of roughly equal importance:

1. Communication and Task Tracking

2. Progress Measurement and Sign-off

3. Continuous Improvement

Communication and Task Tracking

It’s usually the case that there is more than one person in the development team, and it is normal good practice to have independence between the verification engineering activities and the design activities. Hence some level of documented communication between verification and design is desirable. Small teams may believe that this is not needed; engineers may be seated across from one another in the same office space, but these days that is more often not the case. So, it is important to communicate bug details, which are often complex, using a bug tracking platform.

It is not forgivable for bugs to be overlooked or accidentally dropped because the relevant parties assumed they could manage the details in their heads.

This is just basic task tracking, no different to the tracking of other product development tasks and sub-tasks that ensures that nothing gets missed and everything gets assigned to team members and followed through to completion. Commonly, the bug reporter will be the verification engineer who discovered the problem, and the bug assignee will be the design engineer who will need to investigate and debug the issue at an RTL code level, and subsequently deploy and test the fix. When the reporter and the assignee are the same person and the issue is minor, it is understandable to quickly fix the issue without recording it. There is no communication issue in that case, but a time lag could lead to the issue being overlooked, and it will not be accounted for in any future analytics.

The other question that comes up frequently when deploying bug tracking processes is: from when do we need to start capturing bugs? This is also a fair question. Our recommendation is to ensure that, as a minimum, full bug tracking occurs from the point where the design code is considered to be feature-complete, i.e. when we are testing code more than writing new code. Different modules of the design may become feature-complete at different points, so they will start full bug tracking at different times. This point could be the alpha or beta milestone, or whatever you have called your key development milestones.

Progress Measurement and Sign-off

All product development projects track and report on key progress metrics throughout the development process. This is normal project tracking. Bug counts are one of those key project metrics. Interpreting bug curves using dimensions such as open vs. closed, severity, bugs by design module, and bugs by methodology is in part a project management activity and in part an engineering lead activity. These progress curves are particularly significant at the various milestone sign-off points. What is the gradient of the curve at these points and what does that tell us about the level of completion of the various verification activities? It is generally useful to cross-correlate the bug curves with other metrics such as coverage, test pass/fail rates, code churn, etc. For example, if your cumulative bugs curve has plateaued, you need to consider the meaning of that in the context of the volume of testing that is going on. Stopping running regressions is one way to flatten your bug curve!

Figure 1: Cumulative Bug curve over product development lifecycle.

As you approach the final release milestone it would be desirable to see some stabilization in the bug rate. How much more testing is required, with no new bugs found, is a key project risk decision. Experience from past projects can guide teams on this, but as a rule, a significant ongoing bug rate right up to the release milestone is a red flag for post-release quality. It is highly probable that post-release bugs will be a problem.
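
To make the idea of a bug curve gradient concrete, here is a minimal sketch in Python. It computes the cumulative curve, the recent bug rate, and a simple check that a plateau is not just the result of reduced testing. The function names, the four-week window, and the thresholds are all assumptions chosen for illustration, and the data is synthetic.

```python
from itertools import accumulate


def cumulative(weekly_new_bugs):
    """Running total of bugs found, one value per week."""
    return list(accumulate(weekly_new_bugs))


def recent_gradient(weekly_new_bugs, window=4):
    """Average number of new bugs per week over the last `window` weeks."""
    recent = weekly_new_bugs[-window:]
    return sum(recent) / len(recent)


def plateau_is_credible(weekly_new_bugs, weekly_tests_run, window=4,
                        max_rate=1.0, min_test_ratio=0.8):
    """True if the bug rate is low AND test volume has not dropped off.

    The thresholds are placeholder assumptions; calibrate them from past projects.
    """
    low_rate = recent_gradient(weekly_new_bugs, window) <= max_rate
    peak_tests = max(weekly_tests_run)
    recent = weekly_tests_run[-window:]
    recent_tests = sum(recent) / len(recent)
    still_testing = peak_tests > 0 and recent_tests >= min_test_ratio * peak_tests
    return low_rate and still_testing


# Synthetic example data (not from any real project).
bugs = [2, 5, 9, 12, 8, 4, 1, 1, 0, 0]
tests = [100, 400, 900, 1200, 1200, 1200, 1150, 1200, 1180, 1200]
tests_stopped = [100, 400, 900, 1200, 1200, 1200, 0, 0, 0, 0]

print(cumulative(bugs)[-1])                      # total bugs found so far
print(recent_gradient(bugs))                     # new bugs per week, last 4 weeks
print(plateau_is_credible(bugs, tests))          # plateau with regressions still running
print(plateau_is_credible(bugs, tests_stopped))  # plateau because testing stopped
```

The second check is the important one: the same flat bug curve is reassuring when regressions are still running at full volume and meaningless when they are not.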

Continuous Improvement

The final, and important, motivator for good quality bug tracking is the use of bug data for analytics and the potential to extract valuable insights from the data that can be exploited as continuous improvements (or marginal gains).

For this to be successful you need good quality and rich bug datasets.

Discovery data fields are highly useful when it comes to analytics. Some examples of the insight questions that you might want answers to are as follows:

· Which methodologies are the most effective at finding bugs? (Understanding this helps to optimize the blend of methodologies.)

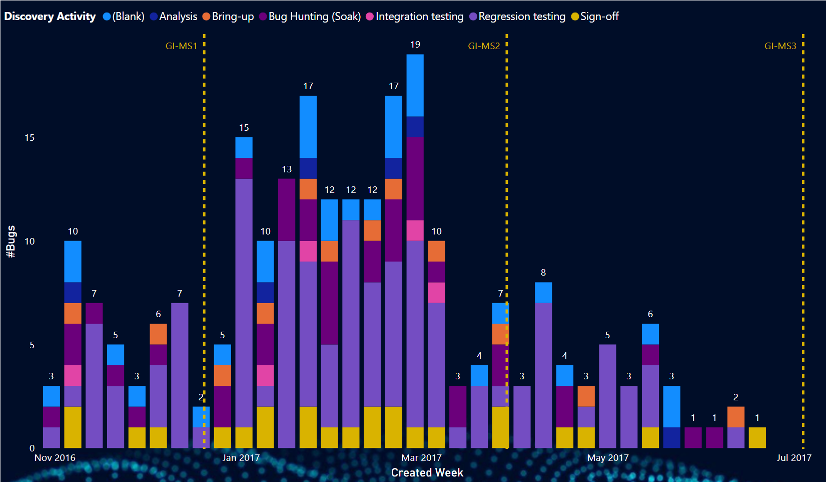

Figure 2: Bugs per week by Methodology.

· Which verification payloads (or stimulus) are the most effective at finding bugs? (Understanding this helps to optimize the verification tests or payloads.)

· Which checkers are the most effective at finding bugs? (Understanding this helps to optimize the use of checkers.)

· What verification activities find the most bugs? e.g. regression testing, continuous integration testing, sign-off testing, coverage analysis and closure, code reviewing, etc. (Understanding this helps to understand the cost-benefit of different activities such as coverage-closure for example.)

Figure 3: Bugs per week by Activity.

· Which design modules have the highest and lowest bug densities? (Understanding this helps the design team to identify bug hot spots in the design that may be due to complexity, code stability, or poor code structure/style. In turn this might direct efforts to review and refactor design units or point toward areas where verification intensity needs to be increased). See example data in Figure 4 below.

Figure 4: Bugs per week by Design Unit.

These types of valuable insights can help a project to optimize the efficiency and effectiveness of its development workflow but can also serve to refine product development best practices to benefit future projects. This philosophy of “continuous improvement” ensures that ROI is protected while product quality is always improving.
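
Once the discovery fields are captured, the insight questions above reduce to simple group-and-count operations. The sketch below, in Python, shows two of them: bugs per week by methodology, and bug density per design unit. The record field names are assumptions, the data is synthetic, and bug density is taken here to mean bugs per thousand lines of RTL, which is one possible definition rather than the only one.

```python
from collections import Counter, defaultdict

# Synthetic bug records; the field names are assumptions for illustration.
RECORDS = [
    {"week": 1, "methodology": "simulation", "design_unit": "fetch"},
    {"week": 1, "methodology": "formal", "design_unit": "decode"},
    {"week": 1, "methodology": "simulation", "design_unit": "fetch"},
    {"week": 2, "methodology": "emulation", "design_unit": "lsu"},
    {"week": 2, "methodology": "simulation", "design_unit": "fetch"},
    {"week": 2, "methodology": "formal", "design_unit": "lsu"},
]

# Hypothetical RTL size per design unit, in lines of code.
LINES_OF_CODE = {"fetch": 3000, "decode": 5000, "lsu": 4000}


def bugs_per_week_by(records, dimension):
    """Count bugs per week, split by one field such as 'methodology'."""
    counts = defaultdict(Counter)
    for record in records:
        counts[record["week"]][record[dimension]] += 1
    return counts


def bug_density(records, lines_of_code):
    """Bugs per thousand lines of code for each design unit."""
    per_unit = Counter(record["design_unit"] for record in records)
    return {unit: 1000 * per_unit[unit] / loc
            for unit, loc in lines_of_code.items()}


by_methodology = bugs_per_week_by(RECORDS, "methodology")
density = bug_density(RECORDS, LINES_OF_CODE)
hot_spot = max(density, key=density.get)

print(dict(by_methodology[1]))
print(hot_spot, density[hot_spot])
```

The same `bugs_per_week_by` helper answers the activity, checker, and payload questions by passing a different field name, provided that field was captured when the bug was logged.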

Best practice

To achieve the 3 goals of bug management above, the first step is that you must capture bug data.

If teams are not consistently capturing the data, or the data is patchy, then you will fall short on all counts. Quality of data is critical here, and that means you need a motivated team that has a “bug tracking culture”. It’s just one of the day-to-day practices and everybody is doing it to roughly the same standard. The standard is what the project leads have agreed and established with the team, and there is no excuse for not complying with it, just like other project level standards such as coding rules, code reviewing, sign-off, version control, test planning, etc.

Also, no one is judged by the number of bugs.

There is no shame in entering a bug; teams and individuals are not measured in bugs, at least not for bugs found pre-release or pre-silicon. Bugs found after release or when the product is in silicon are a different matter as they really do act as leading indicators of overall product quality. We will refer to these post-release bugs as bug-escapes or more formally as “product errata”.

Find as many bugs as you like pre-release, the more the merrier, but don’t miss critical bugs that will have a downstream impact on the customer, rework costs, and reputation, and that could divert your best people from working on the next project in your roadmap to bring in future revenue. This is a key challenge for verification. We have discussed this before in “The Dilemmas of Hardware Verification” and also in “The Cost of Bugs”.

It’s important to get this bug culture right for effective product development teams. It’s a leadership question. It must come from the top down. When new people join your team, they don’t need to question it. It’s just the “way we do things around here”.

So, what are we identifying as “best practice”? Best practice includes some of the following:

Decide and standardize on one bug tracking platform. Mining data across multiple platforms can be difficult. It’s more important to choose one system, than to debate the relative merits of one tool versus another.

Open a bug as soon as you see something that smells like a bug – don’t wait until you have 100% characterized and debugged the problem. If it turns out to be a non-bug, that’s fine, update the bug to “rejected” or “not a bug”. This will ensure that there is transparency and communication for ongoing bug investigations. It does not necessitate heavyweight data entry at this stage. It’s not unknown for previously rejected bugs to be encountered again, with a different outcome.

When a bug is confirmed, enter ALL the bug data fields as these will be needed later for analytics. This is the minimum, but don’t just do the minimum. Think about what information is relevant and will be useful in a few weeks’ time, a few months, or a few years. Whenever you come back to look at this bug in the future, or someone unfamiliar with the bug is trying to understand it, there should be sufficient information. Don’t rely on the bug author being available or being able to remember these details in the future.

Move bugs through the bug workflow, don’t leave them in the open/unresolved state forever. Bug progress analytics or dashboards can help with this, and it usually requires a project lead or a project manager to monitor the open bug backlog to ensure that progress is being made.

Review bugs. Nobody in the team wants the job of the “bug police”, but you do need one or more champions of good practice, who are well respected and able to influence team behaviors positively, to coach others through good bug tracking practices and to periodically scrutinize and correct the data quality. Maybe this is a role you can rotate through the team.

Collect bugs for the design, but also for supporting functions such as verification, specifications, reference models, etc. The verification environment can sometimes be as big and as complex as the design code itself. A design bug can be caused by a verification environment bug (the design was incorrectly made to fit the verification environment behavior), or a reported failure can simply be an error in the verification code. These classes of bugs also need to be tracked, progressed, and fixed.

Don’t get too hung up on “but is it really a bug?”. If it’s a problem in the design that needs to be fixed, then log it as a bug, no matter how it presents itself. For example, some design bugs don’t cause obvious functional errors, but they might present as performance or power issues, or simply things that work but are non-ideal and should really be cleaned up or re-factored to ensure the design is robust. It might be a potential “vulnerability” which has not been observed but is theoretically reachable. Many designs accumulate “technical debt” as the code is patched to fix bugs upon bugs.

Capture root causes. These are pertinent learning points which might occur to you at the time of the bug but will be harder to identify later. If such data is captured in the bug tracking system, it will be gold-dust for future analysis where it may be possible to see clear trends in root causes that point towards something that can be addressed with a view to future bug avoidance.
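
As an illustration of the practices above, here is a minimal sketch in Python of a bug record, a completeness check that a bug "champion" might run, and a root cause trend report. Every field name and category value is a hypothetical choice for this example and not a recommended schema; the right set of fields depends on your project.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class BugRecord:
    # All field names are hypothetical; adapt them to your own schema.
    bug_id: str
    title: str
    component: str                 # e.g. "design", "verification", "specification"
    design_unit: str
    severity: str
    found_by_methodology: str      # discovery fields, used later for analytics
    found_by_activity: str
    found_by_checker: Optional[str] = None
    regression_tag: Optional[str] = None
    root_cause: Optional[str] = None   # filled in during root cause analysis
    status: str = "open"


# Optional fields that analytics will need once the bug is confirmed.
ANALYTICS_FIELDS = ("found_by_checker", "regression_tag", "root_cause")


def incomplete_fields(bug: BugRecord) -> list:
    """Names of analytics fields that are still empty on this bug."""
    return [name for name in ANALYTICS_FIELDS if getattr(bug, name) is None]


def root_cause_trend(bugs) -> list:
    """Root causes ordered from most to least frequent, ignoring blanks."""
    return Counter(b.root_cause for b in bugs if b.root_cause).most_common()


# Synthetic records for illustration only.
bugs = [
    BugRecord("B-1", "FIFO overflow", "design", "lsu", "major", "simulation",
              "regression", "scoreboard", "nightly-001", "ambiguous specification"),
    BugRecord("B-2", "Wrong reset value", "design", "fetch", "minor", "formal",
              "sign-off", "assertion", "formal-007", "ambiguous specification"),
    BugRecord("B-3", "Checker misses stall", "verification", "decode", "minor",
              "simulation", "code review"),
]

print(incomplete_fields(bugs[2]))
print(root_cause_trend(bugs))
```

A report of incomplete records gives reviewers something concrete to follow up on, and the trend list is where repeated root causes start to show up across bugs.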

Adoption barriers

Many of the adoption barriers are to do with people, culture, and leadership. If teams don’t “see the point” in the data collection overhead, then they have not understood the cost-benefit nor the value of the future analytics insights that will be possible. History and precedent can also play a part. Tooling should not be a barrier as there are many good off-the-shelf solutions available, both open source and paid-for tools. Customization may be required to adapt the tools to your chosen bug schema and bug workflow.

A well designed and implemented schema can make a big difference to the overall user experience when entering and tracking bugs.

You will need someone to own and maintain the schema. If there are user issues with your flow, address them quickly, because any hit to productivity will erode your team’s engagement with, and support for, the bug data collection.

Workflow automation

Most teams will be either using external workflow automation tools (commercial or open source), or they will have built their own internal tools. These workflows can and should be integrated with your bug tracking workflow if possible. Assuming your bug tracker provides a REST API for example, you can automate the “new bug” step into a button click and have your regression management tools link bugs and regressions. This lowers the barrier to opening a new bug. This might require your bug schema to incorporate a “regression tag” of some sort, but this linking of bugs to other workflows really helps when it comes to future data mining activities.
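
As a sketch of what the “new bug” button might do behind the scenes, the Python below builds an HTTP POST request that carries a regression tag along with the bug details. The endpoint URL, the JSON field names, and the bearer-token authentication are all hypothetical; substitute whatever your own bug tracker’s REST API actually defines. The request is constructed but deliberately not sent.

```python
import json
import urllib.request

# Hypothetical endpoint; replace with your own tracker's documented API.
TRACKER_URL = "https://bugs.example.com/api/bugs"


def build_new_bug_request(title, design_unit, regression_tag, failing_test, token):
    """Build (but do not send) an HTTP POST that would open a new bug."""
    payload = {
        # Field names are assumptions for illustration.
        "title": title,
        "design_unit": design_unit,
        "regression_tag": regression_tag,   # links the bug back to the regression
        "failing_test": failing_test,
        "status": "new",
    }
    return urllib.request.Request(
        TRACKER_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",
        },
        method="POST",
    )


request = build_new_bug_request(
    title="Scoreboard mismatch in store buffer test",
    design_unit="lsu",
    regression_tag="nightly-001",
    failing_test="store_buffer_random_17",
    token="example-token",
)
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))

# To actually file the bug against a real tracker:
# urllib.request.urlopen(request)
```

Because the regression management tool already knows the failing test and the regression run, it can pre-fill these fields, leaving the engineer to add only a title and any initial observations.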

Bugs and customers

Customers probably don’t need to see the full details of all the development bugs you are tracking.

They do care about the post-release bug rate however, as these are bugs that may impact them directly and have consequential costs and impacts.

The quality of the delivered product is directly reflected in the post-release bug rate, or errata as we are referring to them here. For some projects, there may be a higher level of customer engagement through the development phase, leading to the customer requiring some visibility of bug progress data. They might not need to see the details of each bug case, or to have remote access to the project bugs database, but they are looking for some assurance that the project is progressing to plan because their own deployment plans are sensitive to delivery delays or quality issues.

When it comes to errata reporting, the onus is on the product developer to communicate adequate details in a timely manner. Failure to communicate can lead to greater dissatisfaction than that incurred from the impact of the errata. The customer’s communication needs are different from those of the internal development teams. They may not need to understand all the details of the internal design leading to the erratum, but they do need to understand the following clearly:

The precise conditions required to observe the bug.

The effect of the bug when triggered – is it a lock-up, corruption, performance degradation, etc.?

Any viable workarounds that are available to them with details of any impacts incurred by the workarounds.

The plan to fix the erratum.

In addition, depending on the severity of the erratum and the nature of the target system, they may need root cause analysis details. For example, an automotive customer that is concerned with functional safety might require a full 8D analysis of root causes and corrective actions.

How the business manages errata communications to customers has a big impact on the customer’s experience and the reputation of your company.

Conclusion

If you want to ensure that all bugs are being actioned and nothing is being missed by the team, then bug tracking is required to track all bugs as tasks. If you want to understand what the bug curve gradient is when you are making your product delivery releases, again bug tracking with some basic bug analytics is required. If you want to know how to improve your product quality, reduce bugs, and deliver earlier with lower development costs, then you must analyze the efficiency and effectiveness of your product development workflows using bug data.

Complete bug datasets are the key enabler to meaningful bug analytics.

Clearly, bug logging and bug tracking are short-term productivity overheads to the product development process, so care must be taken to implement the right-sized schemas and processes to enable efficient data capture. A high-quality bug dataset is one of the most valuable datasets for complex hardware product development.

We commend bug tracking to you and your teams.

If you would like to discuss any of these ideas in more detail, including how to establish effective bug schemas, and how to perform insightful analytics on bug datasets and other datasets, then please contact us. We would love to help your team establish bug tracking best practices and learn how to exploit this valuable data source fully.

Copyright © 2024 Silicon Insights Ltd. All rights reserved.
